Accelerating Deep Learning in the Post-Moore's Law World

In a world where transistors are no longer free, how do you continue to accelerate deep learning?

Arguably, one of the most important tailwinds behind the rise of deep learning over the last decade is the availability of faster and cheaper computing power. To put things into perspective, in 1986, the year the backpropagation paper [1] was published, the fastest supercomputer of the day (Cray X-MP/48) had a peak performance of 800 MFLOPS, cost $15M to build, and needed 345kW of power to operate. Today, you can buy a Raspberry Pi 4 that fits in your palm with a peak performance of 13.5 GFLOPS, costs $35, and requires 6W of power at peak load. In other words, going by these figures, performance per watt has improved almost a million-fold, and performance per dollar by even more. This remarkable scaling was made possible by a trend that Gordon E. Moore first predicted in 1965 and that was later refined into its familiar form: the number of transistors on an integrated circuit doubles roughly every two years. In effect, this gave us twice as many transistors every two years for free. It helped us drive performance up and costs down, leading to processors that could train deep neural networks on large amounts of data in acceptable time and at acceptable cost.
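To make the comparison above concrete, here is a small sketch that recomputes performance per dollar and per watt from the figures quoted in the paragraph. The prices are taken as stated (nominal, not adjusted for inflation), and the dictionary and function names are purely illustrative.

```python
# Figures quoted in the text (nominal prices, not inflation-adjusted).
cray_xmp = {"flops": 800e6, "cost_usd": 15e6, "power_w": 345e3}
rpi4 = {"flops": 13.5e9, "cost_usd": 35.0, "power_w": 6.0}


def flops_per_dollar(machine):
    """Peak FLOPS delivered per dollar of purchase cost."""
    return machine["flops"] / machine["cost_usd"]


def flops_per_watt(machine):
    """Peak FLOPS delivered per watt of power draw."""
    return machine["flops"] / machine["power_w"]


dollar_gain = flops_per_dollar(rpi4) / flops_per_dollar(cray_xmp)
watt_gain = flops_per_watt(rpi4) / flops_per_watt(cray_xmp)

print(f"Performance per dollar improved by about {dollar_gain:,.0f}x")
print(f"Performance per watt improved by about {watt_gain:,.0f}x")
```

With these inputs, the per-watt gain comes out just under a million and the per-dollar gain is several times larger.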

Given that the peak compute performance had a strong influence on the pace of innovation in deep learning, we must ask ourselves the question: how has the computing trend fared after the modern deep learning revolution that started in 2012?

Growing Performance Deficit

To dig deeper into the growing compute requirements of modern deep learning algorithms, the graph above plots the relative increase in total compute needed to train deep learning algorithms, along with the increase in peak performance of modern processors and accelerators since 2012. It is exceedingly clear that there is an exponentially growing performance deficit between the supply and demand of computing performance. Let us analyze each trend we see here in detail.

1. Compute for Training Deep Learning Models is Growing at Breakneck Speeds

From AlexNet to today’s large language models, the compute needed to train deep learning models has grown over 2 million times! Compared to that, by Moore’s law, computers can have gotten only about 100 times faster, which is a mere 0.005% of the growth in the compute demands of deep learning algorithms. This is unsustainable and could become the bottleneck that keeps us from innovating further in deep learning.

Why is the computing demand growing this quickly? The performance of large language models has a power-law relationship with the number of parameters in the model and the size of the dataset [2], and the total compute needed to train these models is directly proportional to both [3].
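As a rough illustration of why compute grows so quickly, the sketch below uses a common rule of thumb from the scaling-law literature: training a dense model costs about 6 FLOP per parameter per training token. The constant 6 and the example model and dataset sizes are assumptions for illustration and do not come from this article.

```python
import math


def training_flop(num_params: float, num_tokens: float) -> float:
    """Approximate total training compute, assuming ~6 FLOP per parameter per token."""
    return 6.0 * num_params * num_tokens


# Hypothetical model and dataset sizes, chosen only to show the scaling.
base = training_flop(num_params=1e9, num_tokens=20e9)
bigger = training_flop(num_params=10e9, num_tokens=200e9)

print(f"base model:   {base:.2e} FLOP")
print(f"bigger model: {bigger:.2e} FLOP")
print(f"10x parameters and 10x data needs {bigger / base:.0f}x the compute")

assert math.isclose(bigger / base, 100.0)
```

Because compute is proportional to the product of the two quantities, scaling both together makes the training cost grow quadratically in the scale factor.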

2. We are already in the post-Moore’s Era of Computing

Despite the ongoing debate about whether Moore’s law is dead, it is clear from the figure below that we are already in the post-Moore’s era of computing. FP32 compute is growing more slowly than Moore’s scaling would predict. The gap is even more pronounced once you consider that these processors have received several architectural enhancements that improved performance independently of Moore’s scaling.

The most important distinction of the post-Moore’s law era is that the extra transistors that we are accustomed to using to get more performance out of processors will not come for free. With the demise of Dennard scaling, we also end up paying for extra transistors in terms of cost, power, and chip yield. This brings up the question, how do we judiciously use these extra transistors to get the most performance out of deep learning processors?

3. Hardware Specialization is not the only Answer

Computer architects have relied heavily on specialization to navigate the treacherous waters of the post-Moore’s and post-Dennard scaling world. From Tensor Cores in NVIDIA’s GPUs to TPUs from Google to the numerous startups developing domain-specific accelerators for deep learning, everyone is hoping to bridge the performance deficit.

However, there are two worrying trends, as observed from the figure above. First, specialized accelerators have delivered only a “one-time” boost over their general-purpose counterparts. Prior literature has dubbed this the “accelerator wall” [8]. Second, the rate of increase in compute throughput of specialized accelerators is also limited by a slowing Moore’s law. Therefore, specialization is not the singular answer for accelerating deep learning in the post-Moore’s era of computing.

Two more considerations are of concern:

The performance numbers for specialized hardware on the chart are very optimistic. They assume 100% utilization of all the compute available on-chip, while utilization is much lower in practice [4]. This is often due to limited memory bandwidth and latency (a topic that requires a separate discussion) and inefficient mapping of workloads caused by the varying shapes of deep neural network layers [5].

Emerging deep learning workloads have different compute patterns. For example, in recommendation systems, the most dominant deep learning workload in Facebook’s datacentres, performance is often limited by embedding table accesses rather than by the deep neural networks themselves [6]. Graph neural networks are another example where the bottleneck may not be a tensor operation like matrix multiplication (which is often easier to accelerate).
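Both considerations above come down to the gap between peak and achieved throughput. One standard way to reason about that gap is the roofline model, sketched below: achievable throughput is capped by either peak compute or memory bandwidth times the workload's arithmetic intensity. The peak, bandwidth, and intensity values are made-up numbers for a hypothetical accelerator, not measurements of any real chip.

```python
def attainable_flops(peak_flops, mem_bw_bytes_per_s, flop_per_byte):
    """Roofline bound: limited by compute or by memory traffic, whichever is lower."""
    return min(peak_flops, mem_bw_bytes_per_s * flop_per_byte)


# Hypothetical accelerator: 100 TFLOPS peak compute, 1 TB/s memory bandwidth.
PEAK_FLOPS = 100e12
MEM_BW = 1e12

# Hypothetical arithmetic intensities (FLOP per byte moved from memory).
workloads = {"embedding lookup": 0.25, "large matmul": 200.0}

utilization = {}
for name, intensity in workloads.items():
    achieved = attainable_flops(PEAK_FLOPS, MEM_BW, intensity)
    utilization[name] = achieved / PEAK_FLOPS
    print(f"{name}: {utilization[name]:.2%} of peak")
```

Under these assumptions the matrix multiply can reach peak, while the memory-bound lookup uses a fraction of a percent of the available compute, however many FLOPS the chip advertises.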

Summary

Let us summarize the above discussion. We discussed four important trends that we must be aware of:

The pace of innovation in deep learning is influenced by the availability of faster and cheaper computing power.

The compute demand for training deep learning models is growing at breakneck speeds and is expected to continue to grow exponentially.

We are in the post-Moore’s era of computing, and the extra transistors that we are accustomed to using to get more performance out of processors will not come for free.

Hardware specialization is not the only answer to accelerating deep learning in the post-Moore’s era of computing, as specialized accelerators are also limited by a slowing Moore’s law.

Therefore, we need to rethink how we can continue to accelerate deep learning in the presence of slowing Moore’s law and diminishing returns of specialization.

How do we cater to the increasing performance demand of deep learning with slowing Moore’s law and diminishing returns from specialization?

Outpacing Moore’s Law: A Codesign Approach

There are two key insights that can help us address the above challenge.

Limits of hardware specialization can be broken by expanding the scope of specialization beyond hardware. Specifically, by carefully codesigning and specializing multiple layers of the deep learning stack, we can squeeze more performance out of the same hardware that did not have any further room for specialization.

Unlike scaling the number of cores, which linearly grows the transistor count, adding additional specialization to an existing architecture has minimal (or zero) area overheads. Therefore, even in a world where transistors are not free, it is possible to continue on the journey of specialization.

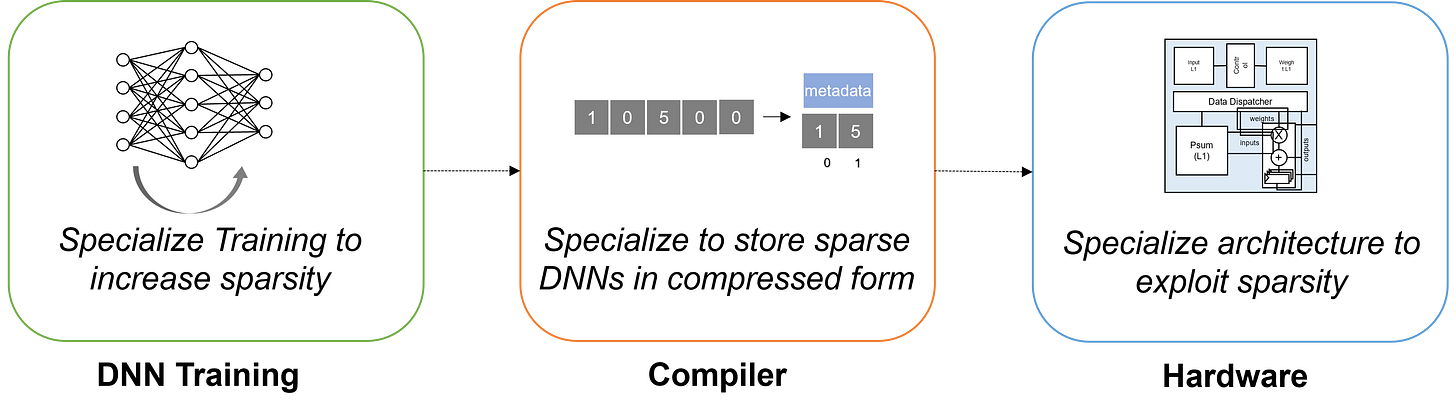

The above figure depicts an example. By specializing training, compiler, and hardware in tandem, we can exploit sparsity (presence of zeros in data) to improve the performance of deep learning architectures. More importantly, this adds minimal additional transistors (e.g., 5% [9]).
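To illustrate what exploiting sparsity means at the level of arithmetic, here is a minimal software sketch: weights are stored in a compressed form that keeps only the nonzero entries, so a dot product performs one multiply per nonzero instead of one per element. This is an analogy for the idea only, not a description of the hardware in [9]; the function names and the example vectors are assumptions.

```python
def compress(vec):
    """Keep only the nonzero entries as (index, value) pairs."""
    return [(i, v) for i, v in enumerate(vec) if v != 0]


def sparse_dot(compressed_weights, activations):
    """Dot product that performs one multiply per nonzero weight."""
    return sum(v * activations[i] for i, v in compressed_weights)


# Hypothetical pruned weight vector: 5 of 8 entries are zero.
weights = [0.0, 2.0, 0.0, 0.0, -1.0, 0.0, 0.0, 3.0]
activations = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]

compressed = compress(weights)
dense_result = sum(w * a for w, a in zip(weights, activations))
sparse_result = sparse_dot(compressed, activations)

print(f"dense multiplies:  {len(weights)}")
print(f"sparse multiplies: {len(compressed)}")
assert dense_result == sparse_result
```

The result is identical, but the compressed version does fewer multiplies and never fetches the activations paired with zero weights.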

Codesigning Across the Deep Learning Stack

In the post-Moore’s law paradigm, we are required to improve performance in a fixed transistor budget, as adding any additional FLOP (floating-point operations)/memory to the chip increases costs. We have two possible directions to tackle this problem:

Reduce the amount of compute to achieve the same result (e.g., a smaller DNN)

Increase compute (FLOP) performed per transistor (e.g., lower precision arithmetic)

The figure above depicts both directions and related optimization techniques. Most of these techniques interact with more than one layer of the deep learning stack, underlining the importance of codesign in outpacing Moore’s scaling. The rest of the article throws light on some of these approaches. Note that this list is by no means exhaustive.

Thrust 1: Reducing Compute Requirements While Achieving the Same Objectives

How do we achieve similar accuracy and objectives while requiring fewer FLOP for training and inference? Here are several approaches synergistic with that thrust.

Neural Architecture Search (NAS) searches for a DNN architecture that tries to maximize the objective (e.g., accuracy). Prior works have successfully demonstrated that it is possible to frame the objective function to improve accuracy while decreasing the FLOP [7].

Compute Efficient Learning Algorithms try to converge in fewer epochs, thereby reducing the total training FLOP. Improved learning rate scheduling techniques and better ways to parallelize stochastic gradient descent are some examples.

DNNs have Redundancy: Pruning harnesses the overparameterization and redundancies of deep neural networks to prune ineffective weights and connections, leading to sparse DNNs. Sparsity can then be exploited by specialized hardware (x*0=0) to effectively avoid ineffective computations and save memory bandwidth by not fetching operands related to ineffective computations. There is rich literature in this direction; however, effectively harnessing unstructured sparsity is still an open problem.

Compute Reuse: Due to repetitions in inputs (videos have similar frames repeated) and weights [12], some computations can be reused by algebraic reassociations (e.g., factorization). Such repetitions are widely found due to decreasing precision (e.g., 8-bit inference), which leads to fewer unique values. Such reassociations can reduce overall compute requirements without harming the accuracy of the underlying model.
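The compute-reuse item above can be sketched for a single dot product. When weights repeat, inputs that share a weight can be summed first and multiplied once, which is the factorization a*x + a*y = a*(x + y). This is a simplified illustration in the spirit of weight-repetition reuse, not the implementation from [12]; the function name and example values are hypothetical.

```python
from collections import defaultdict


def dot_with_reuse(weights, inputs):
    """Group inputs by weight value, then do one multiply per unique weight."""
    input_sums = defaultdict(int)
    for w, x in zip(weights, inputs):
        input_sums[w] += x  # additions only, no multiplies yet

    total = 0
    multiplies = 0
    for w, grouped_sum in input_sums.items():
        total += w * grouped_sum
        multiplies += 1
    return total, multiplies


# Hypothetical low-precision weights with only three unique values.
weights = [2, -1, 2, 3, -1, 2, 3, 2]
inputs = [1, 2, 3, 4, 5, 6, 7, 8]

reference = sum(w * x for w, x in zip(weights, inputs))
result, multiplies = dot_with_reuse(weights, inputs)

print(f"result={result}, multiplies={multiplies} instead of {len(weights)}")
assert result == reference
```

The fewer unique weight values there are, which is exactly what low-precision quantization produces, the fewer multiplies remain.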

Thrust 2: Increasing the Amount of Compute Performed Per Transistor

Another approach is to increase the amount of work done (FLOP) per transistor. Below are some techniques in that direction.

Quantization. A rich line of work explores reducing the bit precision of weight parameters (e.g., 8-bit integers instead of 32-bit floats). Effectively, you can perform ~4x more computations with the same number of transistors if you reduce precision from 32 bits to 8 bits.

Flexible Accelerators. Due to the varying shapes of DNNs, utilization of on-chip resources (e.g., on-chip memory) is often sub-optimal [10]. A way to address this is to impart flexibility to DNN accelerators so that workloads can be mapped onto the accelerator in different ways. For example, the block size of tensors can be varied for different layers based on the architecture and DNN parameters [5]. Imparting flexibility can significantly increase the utilization of resources, improving compute per transistor.

Mapping Space Search. An extension of the above discussion is about finding the best way to map the workload to architecture. Prior works have shown how even the top 5% of the mappings can vary up to 19x in execution efficiency [11]. This is a notoriously difficult problem to solve since the size of the map space can grow exponentially with the number of dimensions of flexibility. For example, the map space of a typical accelerator has more mappings than the number of stars in the Universe! AutoML is a very promising way to solve this [5].

Compiler Optimizations. There is a lot of scope for compiler optimizations, at both intra-operator and inter-operator levels, to improve the utilization of resources in DNN accelerators. For example, graph placement on compute clusters that takes the spatial arrangement of compute nodes into account can lead to better data locality and resource utilization.
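Returning to the quantization item in the list above, the following is a minimal sketch of symmetric per-tensor quantization to 8-bit integers. It is one simple scheme among many and is not tied to any particular framework or accelerator; the function names and example weights are assumptions for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integer codes in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from the integer codes."""
    return [c * scale for c in codes]


# Hypothetical weights.
weights = [0.5, -1.27, 0.03, 1.0]

codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"codes={codes}, scale={scale:.4f}, max error={max_error:.2e}")
```

Each code fits in one byte instead of four, so the same memory and datapath width can hold and process roughly four times as many values, at the cost of a rounding error bounded by half the scale.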

Looking Forward

While the slowdown in Moore’s scaling and decreasing returns on investment on specialization pose challenges in the computing landscape for deep learning, there is a ray of hope with codesign across different parts of the deep learning stack. In fact, this has been happening in the industry over the last few years, as depicted in the figure below. Using codesign techniques and expanding specialization beyond the hardware layer, performance improvement of deep learning algorithms has far outpaced Moore’s scaling.

Going forward, there are still multiple avenues to continuing codesign and specialization across different layers of the deep learning stack that will continue to power the growth in compute throughput. However, it is clear that we must adopt the mantra of “data- and compute-efficient learning” to ensure a more sustainable future.

References

[1] Rumelhart, David E., Geoffrey E. Hinton, and Ronald J. Williams. "Learning representations by back-propagating errors." Nature 323.6088 (1986): 533-536.

[2] Kaplan, Jared, et al. "Scaling laws for neural language models." arXiv preprint arXiv:2001.08361 (2020).

[3] Narayanan, Deepak, et al. "Efficient large-scale language model training on GPU clusters using Megatron-LM." Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis. 2021.

[4] https://openai.com/blog/ai-and-compute/

[5] Hegde, Kartik, et al. "Mind mappings: enabling efficient algorithm-accelerator mapping space search." Proceedings of the 26th ACM International Conference on Architectural Support for Programming Languages and Operating Systems. 2021.

[6] Naumov, Maxim, et al. "Deep learning recommendation model for personalization and recommendation systems." arXiv preprint arXiv:1906.00091 (2019).

[7] Wu, Bichen, et al. "FBNet: Hardware-aware efficient ConvNet design via differentiable neural architecture search." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019.

[8] Fuchs, Adi, and David Wentzlaff. "The accelerator wall: Limits of chip specialization." 2019 IEEE International Symposium on High Performance Computer Architecture (HPCA). IEEE, 2019.

[9] Hegde, Kartik, et al. "ExTensor: An accelerator for sparse tensor algebra." Proceedings of the 52nd Annual IEEE/ACM International Symposium on Microarchitecture. 2019.

[10] Jouppi, Norman P., et al. "In-datacenter performance analysis of a tensor processing unit." Proceedings of the 44th annual international symposium on computer architecture. 2017.

[11] Parashar, Angshuman, et al. "Timeloop: A systematic approach to DNN accelerator evaluation." 2019 IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS). IEEE, 2019.

[12] Hegde, Kartik, et al. "UCNN: Exploiting computational reuse in deep neural networks via weight repetition." 2018 ACM/IEEE 45th Annual International Symposium on Computer Architecture (ISCA). IEEE, 2018.